Hopfield Networks for dummies

Introduction to Hopfield Networks

Hopfield Networks, a type of Recurrent Neural Network (RNN), are renowned for their unique ability to store and retrieve patterns through associative memory. This means they can recall a complete memory from just a partial input. Inspired by the Ising model in physics, which explains magnetic behaviors in certain materials, Hopfield Networks use a system of interconnected neurons, each able to be in one of two states, akin to magnetic dipoles. These neurons are fully connected, each influencing the other based on the strength of their connections. The network dynamically evolves to a stable state where the system’s ‘energy’ is minimized, representing a memory. The overall network state thus can represent binary information, like an image or a pattern, making Hopfield Networks particularly effective in pattern recognition and completion tasks.
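To make the classical picture concrete, here is a minimal sketch of a binary Hopfield network in Python with NumPy. It assumes Hebbian (outer-product) weights and asynchronous sign updates, which is one common textbook formulation rather than anything specific to this post. The function names and the two 8-bit patterns are made up for illustration.

```python
import numpy as np

def train_hebbian(patterns):
    """Build the weight matrix from +/-1 patterns with the Hebbian rule."""
    P = np.asarray(patterns, dtype=float)   # shape: (num_patterns, num_neurons)
    n = P.shape[1]
    W = P.T @ P / n                         # sum of outer products, scaled
    np.fill_diagonal(W, 0.0)                # no self-connections
    return W

def energy(W, s):
    """Classical Hopfield energy of state s (lower means more stable)."""
    return -0.5 * s @ W @ s

def recall(W, s, sweeps=5):
    """Asynchronous updates: visit neurons one by one and align each with its input."""
    s = s.copy()
    for _ in range(sweeps):
        for i in range(len(s)):
            s[i] = 1.0 if W[i] @ s >= 0 else -1.0
    return s

# Two orthogonal toy patterns (illustrative data, not from the post).
p1 = np.array([1, 1, 1, 1, -1, -1, -1, -1], dtype=float)
p2 = np.array([1, -1, 1, -1, 1, -1, 1, -1], dtype=float)
W = train_hebbian([p1, p2])

# Corrupt p1 by flipping one bit, then let the network settle.
probe = p1.copy()
probe[0] = -probe[0]
restored = recall(W, probe)

print("restored == p1:", np.array_equal(restored, p1))
print("energy before:", energy(W, probe), "after:", energy(W, restored))
```

In this toy setup the corrupted probe settles back onto `p1`, and the energy of the restored state is lower than that of the probe, which is the "partial input recalls the full memory" behavior described above.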

Hopfield Networks is all you need

With a bit more context on Hopfield Networks, we can now turn to a quick summary of the paper. Put simply, its main message is this: by introducing a modern Hopfield Network with continuous states, we can store exponentially many patterns and retrieve them with only one update, and the update rule is equivalent to the attention mechanism used in transformers.

Now let’s dive a little deeper into the details. The basic architecture is on

The evolution of Hopfield Networks towards modern architectures involves a transition from binary to continuous neuron states, allowing for more complex and nuanced representations. This advancement closely aligns with the mechanisms used in transformer models in deep learning. The modern approach introduces a new energy function to accommodate these continuous states, fundamentally changing the network dynamics. The update rule in these modern networks parallels the attention mechanism in transformer and BERT models, marking a significant innovation. Modern Hopfield Networks not only enhance the capacity for pattern storage and retrieval but also exhibit improved efficiency in these processes. The research also presents several theorems to explain the behavior of these networks, including their convergence, storage capacity, pattern retrieval efficiency, and error rates. These networks extend beyond mimicking transformer attention, offering potential applications in a wide range of deep learning architectures and providing new tools for designing advanced neural network systems.
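As a rough sketch of the new energy function and update rule, the snippet below uses the form given in the paper: E(ξ) = -lse(β, Xᵀξ) + ½ ξᵀξ + β⁻¹ log N + ½ M², with the update ξ_new = X softmax(β Xᵀξ), where the columns of X are the stored patterns and M is the largest pattern norm. The helper names, the dimensions, and the random patterns are assumptions chosen only for illustration.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax of a 1-D vector."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def log_sum_exp(beta, z):
    """lse(beta, z) = (1/beta) * log(sum_i exp(beta * z_i)), computed stably."""
    m = np.max(beta * z)
    return (m + np.log(np.sum(np.exp(beta * z - m)))) / beta

def energy(X, xi, beta):
    """Energy of state xi for stored patterns X (one pattern per column)."""
    N = X.shape[1]
    M = np.max(np.linalg.norm(X, axis=0))   # largest pattern norm
    return (-log_sum_exp(beta, X.T @ xi) + 0.5 * xi @ xi
            + np.log(N) / beta + 0.5 * M ** 2)

def update(X, xi, beta):
    """One step of the update rule: a softmax-weighted average of the patterns."""
    return X @ softmax(beta * (X.T @ xi))

# Illustrative random data: 5 continuous patterns in 16 dimensions.
rng = np.random.default_rng(0)
d, N, beta = 16, 5, 1.0
X = rng.standard_normal((d, N))
xi = X[:, 0] + 0.3 * rng.standard_normal(d)   # noisy version of pattern 0

energies = [energy(X, xi, beta)]
for _ in range(5):
    xi = update(X, xi, beta)
    energies.append(energy(X, xi, beta))

print(np.round(energies, 4))
```

The printed energies should never increase from one step to the next, which is the behavior the convergence results in the paper formalize.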

The paper introduces five theorems that characterize the modern Hopfield network.

- Theorem 1: The update rule converges globally. For any sequence of states produced by the update rule, the energy converges to its value at a fixed point as time tends to infinity. The proof uses the Concave-Convex Procedure (CCCP) to establish this global convergence.

- Theorem 2: This theorem extends Theorem 1. Not only does the energy converge, but the sequence of states either converges or its limit points form a connected and compact set of stationary points. If this set is finite, the sequence converges to a specific stationary point.

- Theorem 3: This theorem covers the storage capacity of the network. It gives conditions under which a certain number of random patterns can be stored with high probability. The calculations involve the Lambert W function and take into account the dimension of the pattern space and a failure probability.

- Theorem 4: The network can retrieve a pattern with just one update, particularly if the patterns are well separated. The theorem quantifies the retrieval capability in terms of the separation of the patterns.

- Theorem 5: This theorem addresses the retrieval error, which is exponentially small in the pattern separation. It provides bounds on this error, showing that the network retrieves patterns accurately.
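The one-update retrieval and the shrinking error can be illustrated with a small numerical sketch. It assumes the update rule ξ_new = X softmax(β Xᵀξ) and takes the separation of pattern i to be Δ_i = x_i·x_i − max_{j≠i} x_i·x_j. The patterns are rows of a Hadamard matrix, chosen here only because their dot products are easy to reason about. Since the softmax only sees β times the dot products, raising β in this toy setup plays the same role as increasing the separation.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax of a 1-D vector."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def update(X, xi, beta):
    """One step of the modern Hopfield update rule."""
    return X @ softmax(beta * (X.T @ xi))

# Build a 16 x 16 Hadamard matrix; its rows are mutually orthogonal +/-1 vectors.
H2 = np.array([[1.0, 1.0], [1.0, -1.0]])
H = H2
for _ in range(3):
    H = np.kron(H, H2)

X = H[1:5].T                 # 4 stored patterns as columns, dimension 16
target = X[:, 0]

# Query: the target pattern with one entry flipped.
query = target.copy()
query[0] = -query[0]

# Separation of the target from the other stored patterns.
gram = X.T @ X
delta = gram[0, 0] - np.max(gram[0, 1:])

# Retrieval error after a single update, for increasing beta.
errors = {}
for beta in (0.1, 0.5, 1.0):
    retrieved = update(X, query, beta)
    errors[beta] = float(np.linalg.norm(retrieved - target))

print("separation:", delta)
for beta, err in errors.items():
    print(f"beta={beta}: error after one update = {err:.2e}")
```

The error after a single update drops sharply as β·Δ grows, which matches the qualitative statement of Theorems 4 and 5. The sketch does not evaluate the actual bounds from the paper.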

Finally, it is interesting to note how pattern convergence behaves in modern Hopfield networks:

- If the patterns x_i are not well separated, the iteration converges to a global fixed point near the average of all vectors.

- If some patterns are similar to each other yet distinct from the rest, a metastable state forms near these patterns, and the iteration converges to this metastable state.
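Both regimes can be reproduced in a toy experiment. The sketch below assumes the update rule ξ_new = X softmax(β Xᵀξ) with β = 0.5. The pattern sets are hand-built for illustration: in the first, all four patterns differ from each other in only two entries; in the second, two patterns are near-duplicates while the other two are orthogonal to the first.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax of a 1-D vector."""
    z = z - np.max(z)
    e = np.exp(z)
    return e / e.sum()

def iterate(X, xi, beta, steps=100):
    """Apply the modern Hopfield update repeatedly, starting from xi."""
    for _ in range(steps):
        xi = X @ softmax(beta * (X.T @ xi))
    return xi

beta, d = 0.5, 16

# Case 1: patterns that are not well separated.
# Each column is the all-ones vector with a single entry flipped.
close = np.ones((d, 4))
for i in range(4):
    close[i, i] = -1.0
fixed_global = iterate(close, close[:, 0], beta)
dist_to_mean = float(np.linalg.norm(fixed_global - close.mean(axis=1)))

# Case 2: two similar patterns (a, a_twin) plus two distinct ones (b, c).
a = np.ones(d)
a_twin = a.copy()
a_twin[0] = -1.0
b = np.tile([1.0, -1.0], d // 2)
c = np.tile([1.0, 1.0, -1.0, -1.0], d // 4)
mixed = np.stack([a, a_twin, b, c], axis=1)
fixed_meta = iterate(mixed, a, beta)
pair_mean = 0.5 * (a + a_twin)
dist_to_pair_mean = float(np.linalg.norm(fixed_meta - pair_mean))
dist_to_a = float(np.linalg.norm(fixed_meta - a))

print("case 1, distance to average of all patterns:", dist_to_mean)
print("case 2, distance to average of the similar pair:", dist_to_pair_mean)
print("case 2, distance to the starting pattern a:", dist_to_a)
```

In the first case the iteration ends up at the average of all stored patterns even though it starts exactly on one of them. In the second case it settles near the average of the two similar patterns, away from either one individually and far from `b` and `c`.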

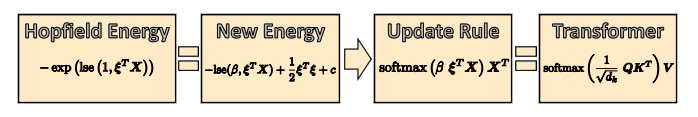

Hopfield Update Rule as Transformer Attention

- The Hopfield network update rule parallels the attention mechanism in transformer and BERT models.

- With stored (key) patterns y_i and state (query) patterns r_i, and using transformations with weight matrices W_K, W_Q, and W_V, the update rule resembles transformer attention.

- The equivalence is shown in the formulation of transformer self-attention, where modifications in weight matrices and softmax function applications are highlighted.
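The correspondence can be checked numerically. The sketch below assumes the form used in the paper, Z = softmax(β R W_Q W_Kᵀ Yᵀ) Y W_K W_V, and compares it with standard scaled dot-product attention using Q = R W_Q, K = Y W_K, V = Y W_K W_V, and β = 1/√d_k. The shapes, the random matrices, and the function names are illustrative assumptions.

```python
import numpy as np

def softmax_rows(Z):
    """Numerically stable softmax applied to each row of Z."""
    Z = Z - Z.max(axis=1, keepdims=True)
    E = np.exp(Z)
    return E / E.sum(axis=1, keepdims=True)

def hopfield_retrieve(R, Y, W_Q, W_K, W_V, beta):
    """Hopfield update in projected space: softmax(beta R W_Q W_K^T Y^T) Y W_K W_V."""
    scores = beta * (R @ W_Q) @ (Y @ W_K).T
    return softmax_rows(scores) @ Y @ W_K @ W_V

def transformer_attention(Q, K, V):
    """Standard scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = K.shape[1]
    return softmax_rows(Q @ K.T / np.sqrt(d_k)) @ V

# Illustrative shapes: 3 state (query) patterns, 6 stored (key) patterns.
rng = np.random.default_rng(0)
S, N, d_r, d_y, d_k, d_v = 3, 6, 8, 8, 4, 5
R = rng.standard_normal((S, d_r))      # state patterns r_i as rows
Y = rng.standard_normal((N, d_y))      # stored patterns y_i as rows
W_Q = rng.standard_normal((d_r, d_k))
W_K = rng.standard_normal((d_y, d_k))
W_V = rng.standard_normal((d_k, d_v))

Q, K = R @ W_Q, Y @ W_K
V = K @ W_V                            # V = Y W_K W_V

Z_hopfield = hopfield_retrieve(R, Y, W_Q, W_K, W_V, beta=1.0 / np.sqrt(d_k))
Z_attention = transformer_attention(Q, K, V)

print("same output:", np.allclose(Z_hopfield, Z_attention))
```

With β set to 1/√d_k the two computations agree up to floating-point error, so in this formulation transformer attention is one step of the Hopfield update applied to all queries at once.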

Applications in Deep Network Architectures

- Hopfield networks extend beyond the attention mechanism, offering additional functionality in deep learning architectures through specific layers.